Versioning a shared dataset using DVC and S3

The reality of data management in small to medium size labs

Properly managing vast amounts of data is critical to tackling machine learning problems. Companies that use machine learning in production leverage concepts such as MLOps and MLSys to manage their data.

On the other hand, what about university laboratories? Unlike companies, university labs are often unable to allocate their human resources to manage their infrastructure and data. In particular, small and medium-sized labs have no choice but to maintain their data in an ad-hoc manner. In such cases, data management needs to be as simple and easy as possible.

This article introduces an attempt to solve the data management problem of lab servers using Data Version Control (DVC) and Amazon S3. We aim to realize systematic data management by a small number of administrators. The technology introduced here is still in the trial-and-error stage. If you have any suggestions for improvement, please contact Matsui.

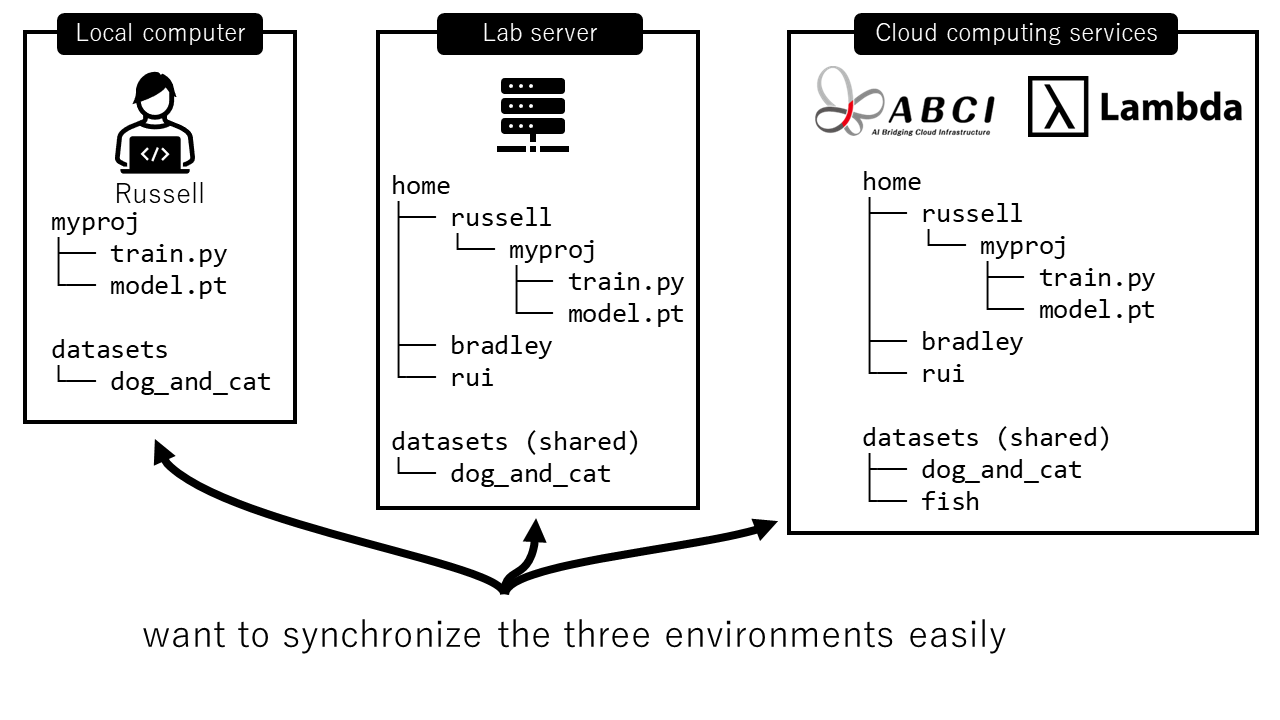

Let’s look at a typical configuration.

Here we have the following three environments.

- Local machine
  - Each student (in this case, Russell) has their own laptop.
  - The research environment is also created on this PC for simple experiments and prototyping.
  - Datasets for the experiments are downloaded.
- Lab server
  - There is an on-premise shared server managed by the lab.
  - Since it is a shared server, there is a space for each student under the home directory.
  - Students run their experiments on this server.
  - The datasets are placed in the shared area because other members of the lab also use them.
- Cloud server
  - For large-scale experiments, students will use servers in the cloud. These include AIST’s ABCI and Lambda GPU Cloud.
  - On these servers, the same environment as the lab server is built.

What we want to do here is to synchronize and version the three environments as much as possible. And we aim to make it as easy as possible for both administrators and users to do so. For example, a user may want to use a model trained on a cloud server to perform inference on a lab server, and then analyze the output on a machine at hand. In such a case, we need to build a mechanism to ensure synchronization between machines.

Here, there are three types of data to consider. We will consider how to manage these.

| Data type | Managed by | Data size | Example |
|---|---|---|---|
| Source code | Each user | Small | train.py |
| User data, such as a trained model | Each user | Large | model.pt |
| Dataset | Administrator | Large | dog_and_cat directory |

A typical configuration

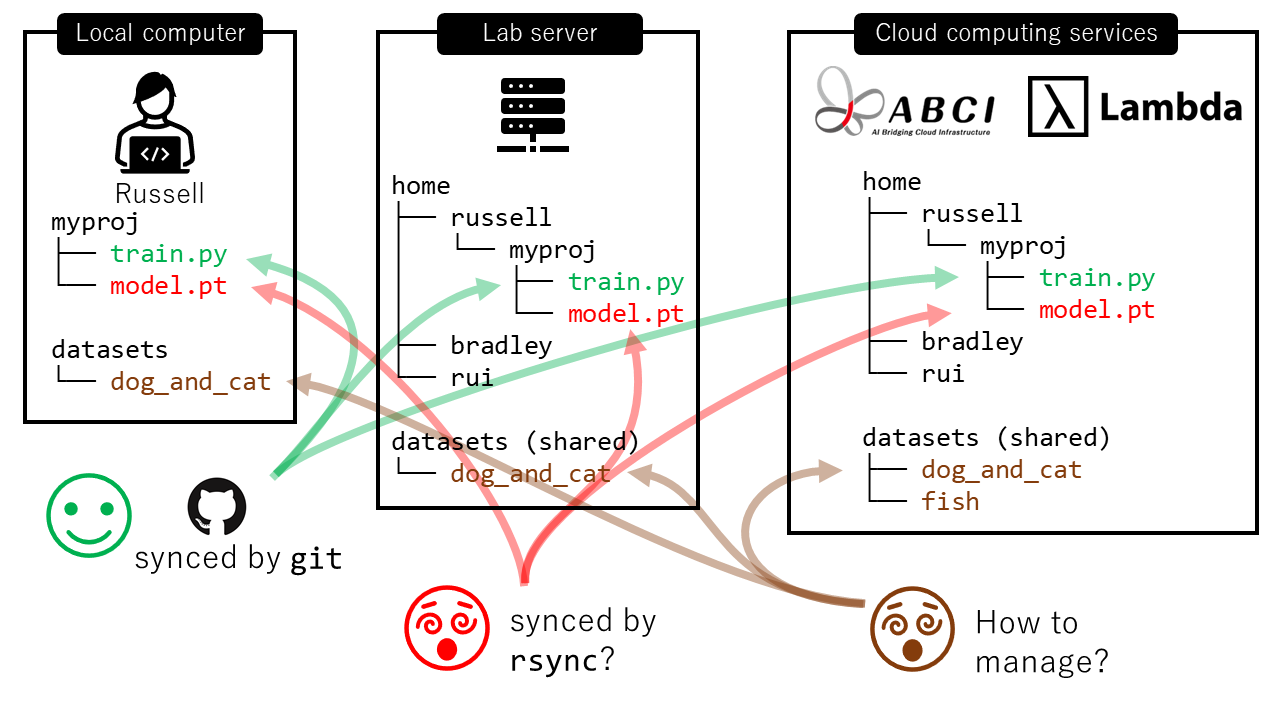

A typical solution is as follows.

- Source code (train.py)
  - Each student can manage their source code using git with GitHub/Bitbucket/GitLab. There’s no problem.
- User data (model.pt)
  - Huge binary data causes a problem. Managing such big data with git is prohibited.
  - If you have only two environments, you can manage the data using rsync with one of them as the master. However, if you have three or more environments, managing them with rsync would become very complicated.
- Datasets (dog_and_cat)
  - Datasets are hard to manage. Suppose that the administrator downloaded a dataset called fish to the cloud server at a user’s request. How should this dataset be synchronized with the lab server? It would be tedious for the administrator to run rsync manually many times.
  - The typical way is to download data only when and where it is required (on an ad-hoc basis!). Such management is dangerous because one cannot verify if the datasets in different environments are really the same. For example, the dog_and_cat dataset on the cloud server may be “ver 3”, downloaded this year. However, the dog_and_cat dataset on the lab server may be “ver 1”, downloaded last year. In that case, the experiment results on the cloud server and those on the lab server will not match even if the same algorithm is applied. This kind of difference is hard to detect.
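The version mismatch described above can at least be detected by hand. The following is a minimal sketch and not part of the workflow proposed in this article. It defines a hypothetical helper, dataset_fingerprint, that reduces a dataset directory to a single hash so that two environments can be compared. It assumes a POSIX shell with GNU md5sum available. The temporary directories and file contents are made up for the demonstration.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: print a single MD5 fingerprint for every file under "$1".
dataset_fingerprint() {
    # Work inside the directory so that the hashed paths are relative,
    # then sort by path so the result does not depend on traversal order.
    ( cd "$1" && find . -type f -exec md5sum {} + | LC_ALL=C sort -k 2 | md5sum | cut -d ' ' -f 1 )
}

# Stand-ins for the same dataset on the lab server and on the cloud server.
lab=$(mktemp -d)
cloud=$(mktemp -d)
printf 'cat image' > "$lab/0000.jpg"
printf 'cat image' > "$cloud/0000.jpg"

fp_lab=$(dataset_fingerprint "$lab")
fp_cloud=$(dataset_fingerprint "$cloud")

# Simulate a silently updated copy on the cloud server.
printf 'newer cat image' > "$cloud/0000.jpg"
fp_cloud_updated=$(dataset_fingerprint "$cloud")

if [ "$fp_lab" = "$fp_cloud" ]; then echo "before update: identical"; fi
if [ "$fp_lab" != "$fp_cloud_updated" ]; then echo "after update: datasets differ"; fi

rm -rf "$lab" "$cloud"
```

Running such a check on every machine for every dataset is exactly the kind of manual bookkeeping that is easy to forget, which is why a tool that records the hash for us is attractive.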

Proposed approach: Data synchronization with DVC and S3

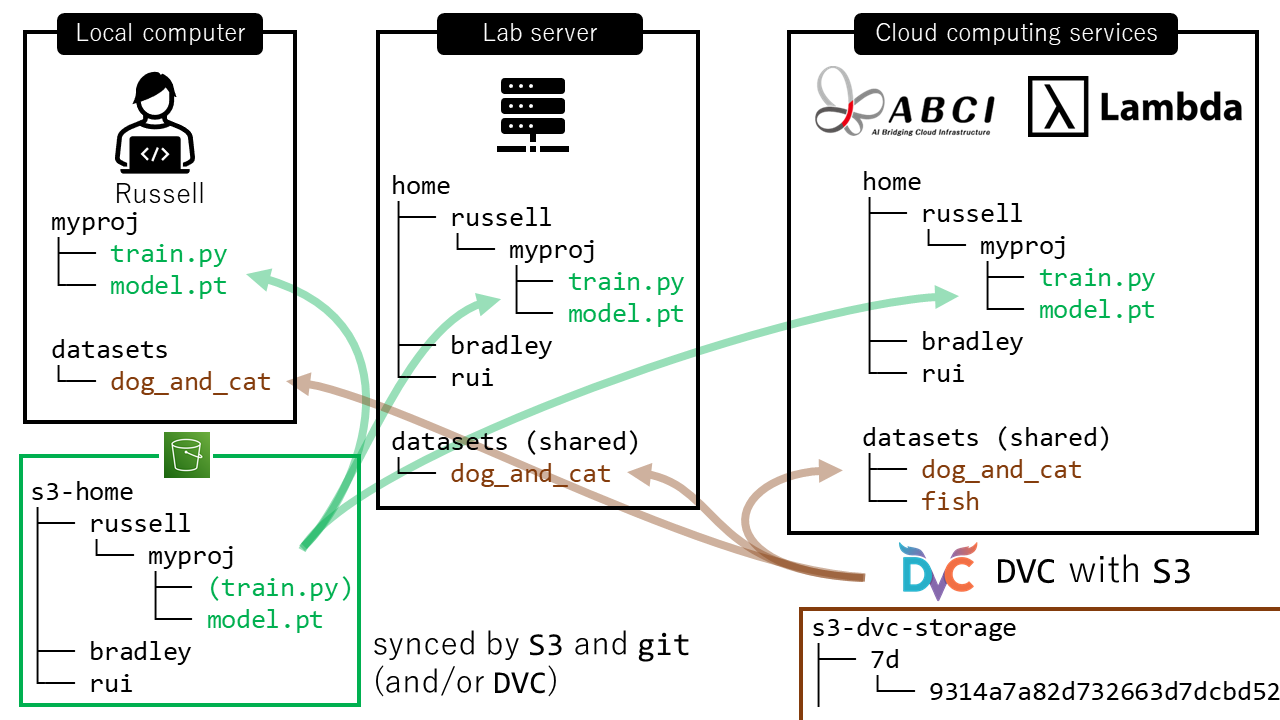

In contrast, the following is an example of the proposed configuration using DVC and S3.

Let’s assume that the administrator has already signed up with AWS, and each student has access to Amazon S3. Note that Amazon S3 is the most basic storage service on AWS. In a nutshell, it is a network hard-disk by AWS.

The administrator creates a space for each student on S3 in advance, i.e., a home-like area. Each student has appropriate access control for their area. The green s3-home area at the bottom left in the above figure visualizes the space. This S3 space is the master area for each student.

Under this configuration, the data will be managed in two ways as follows.

- Source code (train.py) and user’s data (model.pt)
  - Again, each student can manage their source code with git.
  - The user data will be handled by Amazon S3.
  - Each user can freely upload data to their S3 area and download it when required.
  - In this way, students can synchronize their data by simply using network storage as the master space. Here students can synchronize the source code as well if they need it. The usage of the area is left to the user.
  - If a student is an expert, he/she can use DVC for this personal area management as well.
- Datasets (dog_and_cat)
  - Unlike the user area, we want to manage the shared dataset more accurately and systematically.
  - Here, we will leverage Data Version Control (DVC). DVC achieves a “version control over data”. We will use dvc, a lightweight command-line tool, to manage the data.
  - The data entity is placed on S3, which is drawn in the above figure as s3-dvc-storage surrounded by the brown frame in the lower right. The data to be shared is renamed to its md5sum hash value and stored.
  - The dvc command allows us to synchronize the dataset between the three environments.

Now, let’s take a look at each of the above two methods.

User area management (S3)

Each student can freely manage their area. By using various commands of awscli, students can easily upload and download files. For example, the following command syncs the local directory contents to S3 (sync to).

$ aws s3 sync . s3://YOUR-LAB-s3-home/russell/myproj --delete # sync to

Conversely, if a student wants to sync the contents of S3 to their local side (sync from), the following command will do the job.

$ aws s3 sync s3://YOUR-LAB-s3-home/russell/myproj . --delete # sync from

The students can also use the AWS extension for VS Code to upload and download data through a GUI.

Managing shared datasets (DVC + S3)

Now, let’s take a closer look at how to create datasets.

The point is that we represent each dataset by its md5sum hash value and version-control only those hash values with git.

We will store the dataset itself in external storage such as S3.

Now let’s create the actual repository. We will manage the datasets in a single git repository.

That repository should be readable and writable by any lab member.

In this section, we will create such datasets from scratch.

Initial Setup

First, create an empty repository called datasets in the lab’s GitHub organization. Then, the administrator runs the following commands.

$ git clone https://github.com/YOUR_ORGANIZATION/datasets.git

$ cd datasets

$ pip install "dvc[s3]" # Install DVC together with its S3 dependencies

Next, the administrator initializes dvc on this directory.

$ dvc init # Initialize DVC.

$ dvc remote add -d s3_storage s3://YOUR-LAB-s3-dvc-storage # Specify the S3 URL to store the data.

Here, s3://YOUR-LAB-s3-dvc-storage is the S3 area for the storage of the dataset. The administrator should prepare this in advance.

Then, the directory structure will look like the following.

$ tree -a -L 2

.

├── .dvc

│   ├── .gitignore

│   ├── config

│   ├── plots

│   └── tmp

├── .dvcignore

└── .git

Here, the .dvc directory contains the configuration files for dvc. The .dvc/config file contains the path to the S3 area that we specified.

$ cat .dvc/config

[core]

remote = s3_storage

['remote "s3_storage"']

url = s3://YOUR-LAB-s3-dvc-storage

Let’s update the repository once at this stage. This update completes the initial setup.

$ git add .dvc # Version control the .dvc itself

$ git commit -m "Initialize DVC"

$ git push origin main

Add a dataset

Now, let’s add a dataset. Here, the administrator will add a dog_and_cat directory that contains six images.

The directory will look like this

$ tree -a -L 2

.

├── .dvc

├── .dvcignore

├── .git

└── dog_and_cat

    ├── 0000.jpg

    ├── 0001.jpg

    ├── 0002.jpg

    ├── 0003.jpg

    ├── 0004.jpg

    └── 0005.jpg

The administrator wants to version all of these images, but managing binary files with git is not recommended.

So let’s use the dvc command.

$ dvc add dog_and_cat

The above command will create a file named dog_and_cat.dvc.

It contains the md5sum hash value of the dog_and_cat directory.

$ tree -a -L 2

.

├── .dvc

├── .dvcignore

├── .git

├── .gitignore

├── dog_and_cat

│   ├── 0000.jpg

│   ├── 0001.jpg

│   ├── 0002.jpg

│   ├── 0003.jpg

│   ├── 0004.jpg

│   └── 0005.jpg

└── dog_and_cat.dvc # This is just a file

$ cat dog_and_cat.dvc

outs:

- md5: 8d52124bbbf2371ebfd8e636038431ca.dir

  size: 404150

  nfiles: 6

  path: dog_and_cat

Also, .gitignore will be updated such that dog_and_cat itself should be ignored by git.

$ cat .gitignore

/dog_and_cat

Now the administrator manages the above two files with git.

$ git add dog_and_cat.dvc .gitignore

$ git commit -m "Added dog_and_cat dataset"

$ git push origin main

Here, from the git side, only the md5sum values of the dataset will be versioned as a text string.

Whenever the dataset is updated or added in the future, only the md5sum is managed by git, as shown above.

Now then, what about the data itself? Here, the administrator will transfer the data to S3 by dvc push.

$ dvc push

Now, the data itself is stored in S3. Let’s check this content.

$ aws s3 ls s3://YOUR-LAB-s3-dvc-storage

PRE 6b/

PRE 8d/

PRE 94/

PRE a7/

PRE cc/

PRE d3/

PRE f9/

As you can see, the data has been renamed to md5sum values and saved.
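To connect this listing with the .dvc file, here is a minimal sketch of how a hash maps to an object key. It assumes the remote layout used by the DVC version in this article: the first two hex characters of the hash form a prefix and the remaining characters form the object name. This matches the 8d/ entry above, but newer DVC releases may nest objects under an additional prefix. The variable names are illustrative.

```shell
#!/bin/sh
set -eu

# A .dvc file in the shape DVC writes it; the md5 value is the one shown earlier.
dvc_file='outs:
- md5: 8d52124bbbf2371ebfd8e636038431ca.dir
  size: 404150
  nfiles: 6
  path: dog_and_cat'

# Pick the value that follows "- md5:".
hash=$(printf '%s\n' "$dvc_file" | awk '$1 == "-" && $2 == "md5:" { print $3 }')

# Assumed layout: <first two characters>/<remaining characters>.
prefix=$(printf '%s' "$hash" | cut -c 1-2)
rest=$(printf '%s' "$hash" | cut -c 3-)
remote_key="$prefix/$rest"

echo "s3://YOUR-LAB-s3-dvc-storage/$remote_key"
```

Under this assumption, the 8d/ prefix holds the entry for the dog_and_cat directory itself, and the other six prefixes presumably hold the six images.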

If the administrator wants to add a new dataset, he/she can use the same method as above to create a directory such as fish.

Use datasets in another environment

What should we do if we want to use the above datasets in another environment?

Here, we will assume that a student will use the above dataset on her local laptop.

Let’s move to a working directory and git clone the datasets repository.

$ cd SOMEWHERE

$ git clone https://github.com/YOUR_ORGANIZATION/datasets.git

$ cd datasets

$ tree -a -L 1

.

├── .dvc

├── .dvcignore

├── .git

├── .gitignore

└── dog_and_cat.dvc

As you can see here, the repository includes the dog_and_cat.dvc file.

On the other hand, the data itself (the dog_and_cat directory) is not included in the repository. To pull the dog_and_cat directory from S3, run the following command, which downloads the complete dataset.

$ dvc pull dog_and_cat # Pull the dataset itself from S3

$ tree -a -L 1

.

├── .dvc

├── .dvcignore

├── .git

├── .gitignore

├── dog_and_cat

└── dog_and_cat.dvc

It’s done! Now you can properly version huge files using only the command line tools git and dvc.

This method is safer than ad-hoc rsync for datasets because every dataset version is recorded in a git repository.

Because each environment pulls the data identified by the same hash, the data is also guaranteed to be the same across the environments.

Also, administrators can use the above method to manage datasets on both lab servers and cloud computers.

That is, by cloning the datasets repository into a shared space and pulling the data there, the datasets can be managed and synchronized in a git-managed manner.

The above strategy is a combination of the official DVC use cases: Sharing Data and Model Files and Data Registries.

Discussions

- Why S3? What about other AWS services?

- Because S3 is the easiest to use. If there exist better and easier options, please let me know! FSx for Lustre?

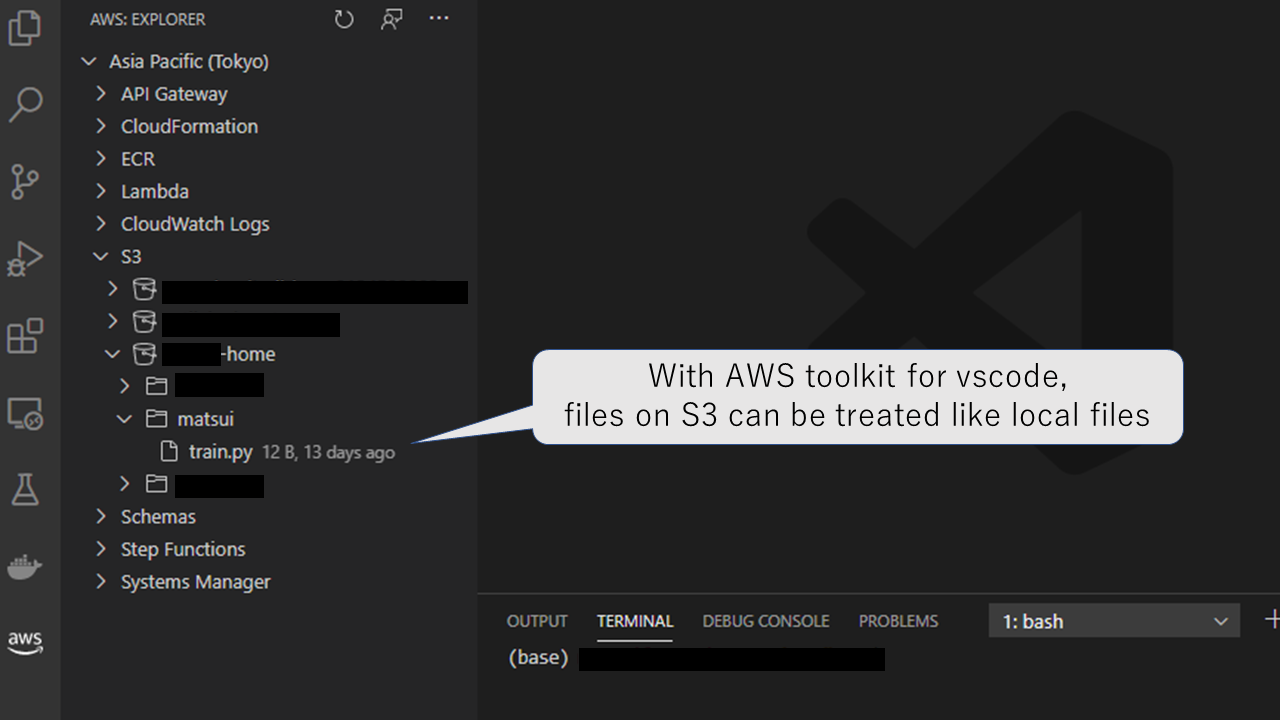

- From a practical standpoint, S3 has the following advantages: (1) almost no setup is required, (2) it can be operated with a single awscli command, and (3) users can view files on S3 directly via VS Code. S3 therefore seems a good enough option at the current stage.

- How about the storage cost of S3?

- In the Tokyo region (all prices below assume the Tokyo region), storage costs 0.025 USD/GB per month for the first 50 TB, that is, 25 USD/month per 1 TB. Whether you think this is expensive or cheap depends on your situation, but it certainly feels much better than maintaining an on-premise HDD in the lab.

- Also, using a mechanism called S3 Intelligent-Tiering, unused data is automatically stored at a low cost. The cheapest plan (S3 Glacier Deep Archive) costs 0.002 USD/GB per month, which is 2 USD/month per 1TB (less than 1/10 of the price of regular S3). This cost is quite cheap.

- What about the cost of data transfer?

- Uploading data to S3 is free.

- It costs 0.114 USD/GB to retrieve the data from S3. This cost is not cheap; if you want to pull a 500GB dataset, it alone will cost you 57 USD.

- This is the weakest point of the system we are proposing. If you know a way to make the data retrieval cheaper, please let me know.

- What about Google Cloud Storage (GCS) or other similar services?

- GCS is also a good choice. GCS can also be used as a backend of DVC. Note that GCS does not have a mechanism like the S3 Intelligent-Tiering mentioned above.

- In fact, ABCI’s Cloud Storage (an S3-compatible system provided by ABCI) is the cheapest: 6 USD/month per 1TB. However, I was a little afraid to depend on ABCI for my master data, so I chose AWS this time.

- Is it hard to set up AWS?

- It isn’t easy… The administrator needs to know about AWS; for example, he/she has to set up the appropriate IAM role for each student. It is also necessary to distribute secret access keys to each student.

- Some may argue that we started this project to manage the lab environment efficiently, but it is not worth it if it is too hard to set up AWS. However, only the administrator has to do it, and the students only have to learn the usage of awscli. And the advantage of using an AWS-based system compared to maintaining an on-premise system is that the lab members don’t need to manage physical infrastructure at all (AWS is a cloud service!). This advantage is tremendous.

- Also, while knowledge of AWS is required, the other side of the coin is that only the PI (or a tiny number of administrators) needs to know how it works.

- One more thing. While the initial setup of AWS is complicated, once it is achieved, the data management itself with DVC + S3 is surprisingly easy.

- Can DVC be used for user data management?

- Yes, it can.

- What about other candidates besides DVC?

- As far as I have surveyed, DVC seems to be the best in providing the simplest “git of data”. Other mechanisms that provide model management in the context of machine learning include Pachyderm and MLflow. While they are more sophisticated, they also seem to be more complex. The only one that is easier than DVC is Git-LFS, but Git-LFS does not seem suitable for machine learning workflows due to the lack of flexibility in configuring the cloud storage behind it. The Service Comparison Page is a good reference.
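The storage and transfer figures quoted in the discussion above can be reproduced with a few lines of arithmetic. This is a minimal sketch using only the per-GB prices stated in this article (Tokyo region; actual prices may have changed since) and treating 1 TB as 1000 GB, as the text does. The cost helper is hypothetical.

```shell
#!/bin/sh
set -eu

# Prices quoted in the discussion (Tokyo region, USD per GB).
standard_per_gb=0.025       # S3 standard storage, per month
deep_archive_per_gb=0.002   # S3 Glacier Deep Archive, per month
transfer_out_per_gb=0.114   # retrieving data from S3

# Hypothetical helper: multiply a size in GB by a per-GB price.
cost() {
    LC_ALL=C awk -v gb="$1" -v price="$2" 'BEGIN { printf "%.2f", gb * price }'
}

storage_1tb=$(cost 1000 "$standard_per_gb")
archive_1tb=$(cost 1000 "$deep_archive_per_gb")
pull_500gb=$(cost 500 "$transfer_out_per_gb")

echo "Standard storage, 1 TB: $storage_1tb USD/month"
echo "Deep Archive, 1 TB:     $archive_1tb USD/month"
echo "Pulling 500 GB once:    $pull_500gb USD"
```

Note that the transfer cost is paid on every pull, so a dataset that is pulled to several machines multiplies the last figure accordingly.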

LabOps

We will define LabOps as a system of technologies that facilitates research in a laboratory. It is the university laboratory version of DevOps and MLOps. We are planning to publish more articles on LabOps in the future.